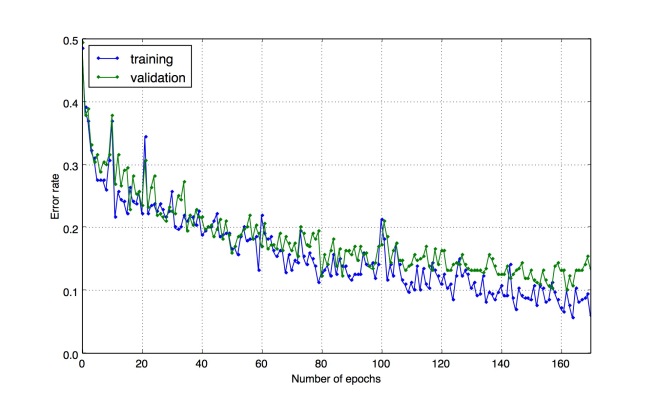

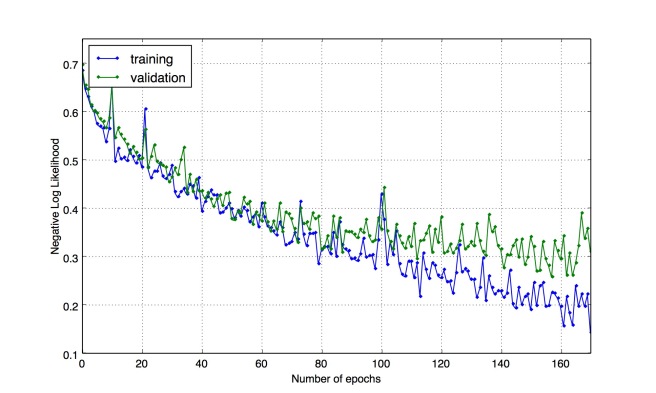

After training a network with two conv/pool layers, a fully connected layer and the output layer, it was quite natural to try deeper architectures. Guillaume and Iulian had already reached around 85% and 79% testing accuracy, respectively, with 3-4 conv/pooling layers. Following the trend, I tried deeper architectures as well. I first tried 5 conv/pooling layers and obtained a testing accuracy of around 87% after ~100 epochs. Then I tried a network with 6 conv/pooling layers (without zero padding). The resulting 8-layer deep architecture reached a testing accuracy of 88.9% after ~170 epochs.

Vincent’s script returned:

Test error rate is 0.112

Valid error rate is 0.1068

Train error rate is 0.0598

To choose the maximal number of conv/pooling layers, I started from the size of the representation I wanted to end up with, 256, and designed the layers backwards, towards the inputs (that way, you never have to divide an odd number by 2…). I ended up with the following architecture (listed from input to output):

– dim (260, 260, 3) kernel (5,5) feature maps 32

– dim (256, 256, 32) pooling (2,2)

– dim (128, 128, 32) kernel (5,5) feature maps 32

– dim (124, 124, 32) pooling (2,2)

– dim (62, 62, 32) kernel (5,5) feature maps 64

– dim (58, 58, 64) pooling (2,2)

– dim (29, 29, 64) kernel (4,4) feature maps 64

– dim (26, 26, 64) pooling (2,2)

– dim (13, 13, 64) kernel (4,4) feature maps 128

– dim (10, 10, 128) pooling (2,2)

– dim (5, 5, 128) kernel (4,4) feature maps 256

– dim (2, 2, 256) pooling (2,2)

– dim (1, 1, 256) fully connected 256 neurons

– dim (1, 1, 256) fully connected 256 neurons

– two output neurons (I discussed the choice between one and two output neurons in earlier posts)
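
A small Python sketch can check the backwards design described above. The helper names `backward_input_size` and `forward_shapes` are hypothetical, and the sketch assumes every convolution is "valid" (stride 1, no zero padding) and every pooling is 2×2 with stride 2, as in the list.

```python
# Layer spec copied from the list above: (kernel size, feature maps) per stage.
# Assumption: every conv is "valid" (stride 1, no zero padding) and is followed
# by a 2x2 pooling with stride 2.
STAGES = [(5, 32), (5, 32), (5, 64), (4, 64), (4, 128), (4, 256)]


def backward_input_size(final_size, stages):
    """Walk from the last pooling output back to the input image size."""
    size = final_size
    for kernel, _ in reversed(stages):
        size = size * 2           # a 2x2/stride-2 pool halves its input, so double
        size = size + kernel - 1  # a valid conv shrinks its input by kernel - 1
    return size


def forward_shapes(input_size, in_channels, stages):
    """Trace (height, width, channels) forward, refusing to pool an odd size."""
    size, channels = input_size, in_channels
    shapes = [(size, size, channels)]
    for kernel, maps in stages:
        size, channels = size - kernel + 1, maps
        shapes.append((size, size, channels))
        if size % 2:
            raise ValueError(f"odd size {size} reached a 2x2 pooling")
        size //= 2
        shapes.append((size, size, channels))
    return shapes


print(backward_input_size(1, STAGES))  # 260
for shape in forward_shapes(260, 3, STAGES):
    print(shape)
```

Going backwards from a 1×1 map only ever multiplies by 2 and adds `kernel - 1`, so every pooling layer is guaranteed to receive an even size; the forward trace reproduces the dimensions in the list.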

It might be possible to add even more conv/pooling layers by allowing zero padding, using smaller kernels, removing some pooling layers, or using larger input images…
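
To see why zero padding buys extra depth, here is a rough count based on the standard convolution output-size formula floor((n + 2p - k) / s) + 1. The helper names are hypothetical, and the sketch assumes 5×5 kernels everywhere, stride 1, and 2×2/stride-2 pooling that floors odd sizes (dropping a border row or column). It is a back-of-the-envelope count, not a trained architecture.

```python
def conv_output_size(n, kernel, padding=0, stride=1):
    """Standard output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def count_stages(input_size, kernel, padding):
    """Count conv + 2x2/stride-2 pooling stages that fit before the map reaches 1x1.

    Odd sizes are floored by the pooling (one border row/column is dropped).
    Returns (number of stages, final spatial size).
    """
    size, stages = input_size, 0
    while size > 1:
        conv = conv_output_size(size, kernel, padding)
        if conv < 2:  # too small to pool any further
            break
        size = conv // 2
        stages += 1
    return stages, size


print(count_stages(260, kernel=5, padding=0))  # (5, 4): valid 5x5 convs
print(count_stages(260, kernel=5, padding=2))  # (8, 1): "same" 5x5 convs
```

With 260×260 inputs, valid 5×5 convolutions fit only 5 stages before the map is too small for another one, whereas "same" padding (p = 2) allows 8 stages down to 1×1. Mixing in 4×4 kernels, as in the list above, is what makes 6 valid stages possible.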

Weights were initialized uniformly between -0.1 and 0.1. For a while I was not able to train my models properly because my initial weights were too small (the output of the network was always 0.5).
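
Why too-small weights pin the output at 0.5: with a two-way softmax (or a single sigmoid) output, near-zero logits give probabilities near 0.5, and every layer with tiny weights shrinks the signal further. The sketch below uses a toy fully connected tanh network as a stand-in for the conv net. The depth, width, fake inputs and the 1e-3 "too small" scale are illustrative assumptions; weights are drawn uniformly from [-scale, scale].

```python
import numpy as np


def forward(x, scale, depth=6, width=256, seed=0):
    """Toy tanh MLP with a 2-way softmax output.

    Weights ~ Uniform(-scale, scale), biases zero. A stand-in for the conv net,
    only meant to show what tiny initial weights do to the output.
    """
    rng = np.random.default_rng(seed)
    h = x
    for _ in range(depth):
        w = rng.uniform(-scale, scale, size=(h.shape[1], width))
        h = np.tanh(h @ w)
    logits = h @ rng.uniform(-scale, scale, size=(width, 2))
    logits -= logits.max(axis=1, keepdims=True)  # numerically stable softmax
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)


x = np.random.default_rng(1).uniform(0.0, 1.0, size=(64, 256))  # fake inputs
for scale in (1e-3, 0.1):
    p = forward(x, scale)
    # Largest distance from 0.5 of the first class probability over the batch.
    print(scale, np.abs(p[:, 0] - 0.5).max())
```

With `scale=1e-3` the deviation from 0.5 is essentially zero for every input, while with `scale=0.1` the predictions actually vary from one input to another. Tiny weights also mean tiny gradients, which is consistent with training stalling at 0.5.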

I chose 260×260 input images, a bit bigger than the default 221×221, because we should probably keep as much information as possible. The number of feature maps increases as we go deeper to compensate for the loss of neurons per feature map. The spatial size of the representation always decreases, starting from 260×260×3 and ending at 256. The first 3 kernels are 5×5 and the last 3 are 4×4: as we go deeper, the neurons have larger receptive fields, so we may need smaller kernels. My previous models were not overfitting (not much, at least), so I could increase their capacity, and that is what I did here: I used more feature maps (64, 128, 256) while keeping the same number of hidden neurons (256). As expected, this model overfits more than my previous ones (train error of 0.0598 versus a test error of 0.112).
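
The receptive-field growth mentioned above can be computed with the usual recurrence for stacked layers without padding: r ← r + (k - 1)·j and j ← j·s, where k is the kernel size, s the stride and j the distance, in input pixels, between adjacent units. A minimal sketch for the six conv/pool stages listed earlier (the names `LAYERS` and `receptive_fields` are hypothetical):

```python
# (kernel, stride) of each layer in order: a valid conv (stride 1) followed by
# a 2x2/stride-2 pooling, for the six stages listed earlier (assumes no padding).
LAYERS = []
for k in (5, 5, 5, 4, 4, 4):
    LAYERS += [(k, 1), (2, 2)]


def receptive_fields(layers):
    """Receptive-field size (in input pixels) after each layer."""
    r, jump = 1, 1  # one input pixel; adjacent units are 1 pixel apart
    sizes = []
    for kernel, stride in layers:
        r += (kernel - 1) * jump  # each extra kernel tap reaches `jump` pixels further
        jump *= stride            # pooling spreads adjacent units further apart
        sizes.append(r)
    return sizes


print(receptive_fields(LAYERS))
# [5, 6, 14, 16, 32, 36, 60, 68, 116, 132, 228, 260]
```

The last pooling layer ends up with a 260-pixel receptive field, i.e. the whole input image, and the 4×4 kernels of the deeper layers still cover large regions because their taps are 8 to 32 input pixels apart.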

I used a fixed learning rate of 1e-3, but judging by the jitter in the training error/NLL, I could probably have chosen a smaller learning rate. Like Guillaume, I used a momentum of 0.1, but I have not tuned it.
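
For reference, classical (heavy-ball) momentum updates a velocity v ← μ·v - η·∇L and then sets w ← w + v. The sketch below assumes this textbook form; the framework used for these experiments may implement a variant (e.g. Nesterov momentum), and `sgd_momentum` is a hypothetical helper. It also shows why a momentum of 0.1 changes little: with consistent gradients the effective step size is η / (1 - μ), only about 11% above plain SGD.

```python
import numpy as np


def sgd_momentum(grad_fn, w0, lr=1e-3, momentum=0.1, steps=1000):
    """Classical (heavy-ball) momentum: v <- momentum * v - lr * grad(w); w <- w + v."""
    w = np.asarray(w0, dtype=float).copy()
    v = np.zeros_like(w)
    for _ in range(steps):
        v = momentum * v - lr * grad_fn(w)
        w = w + v
    return w


# With a constant gradient g the velocity settles at -lr * g / (1 - momentum),
# so the effective step size is lr / (1 - momentum).
lr, momentum = 1e-3, 0.1
print(lr / (1 - momentum))  # about 1.11e-3: only ~11% more than plain SGD
```

For example, `sgd_momentum(lambda w: w - target, [0.0, 0.0], lr=0.1)` converges to `target` on the quadratic 0.5·||w - target||²; a momentum closer to 0.9 would multiply the effective step by about 10 instead.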

I was quite surprised to see that Guillaume’s and Iulian’s architectures were quite small (32, 16 and 16 feature maps and a fully connected layer of only 16 neurons for Guillaume!) yet still performed very well. By comparison, my architecture is much bigger. It may look like a bit of overkill!

What’s next?

– I will now start working on data augmentation: distorting images on the fly (linear and non-linear transformations). I will probably evaluate this data augmentation on smaller models to save time. If it improves the smaller models, it will likely improve the bigger ones as well.

– I might also try even deeper architectures.

– I may also start working on rectangular or variable-size inputs to exploit more information.
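
On-the-fly augmentation of the kind planned above could look like the following NumPy-only sketch. Everything in it is hypothetical (function names, parameter ranges, zero fill outside the image, nearest-neighbour sampling). It covers only linear (affine) distortions plus horizontal flips, not the non-linear ones, and a real pipeline would more likely use an interpolating warp from an image library.

```python
import numpy as np


def random_affine(image, rng, max_angle=10.0, scale_range=(0.9, 1.1),
                  max_shift=8, flip=True):
    """Warp an (H, W) or (H, W, C) image with a random rotation, scale and shift.

    Inverse mapping: every output pixel looks up the nearest source pixel.
    Pixels that map outside the source image are filled with 0.
    """
    h, w = image.shape[:2]
    angle = np.deg2rad(rng.uniform(-max_angle, max_angle))
    scale = rng.uniform(*scale_range)
    shift = rng.uniform(-max_shift, max_shift, size=2)
    cos, sin = np.cos(angle), np.sin(angle)
    # Inverse of "rotate by angle, then scale", mapping output coords to input coords.
    inv = np.array([[cos, sin], [-sin, cos]]) / scale
    center = np.array([(h - 1) / 2.0, (w - 1) / 2.0])[:, None]
    yy, xx = np.mgrid[0:h, 0:w]
    coords = np.stack([yy.ravel(), xx.ravel()]).astype(float)  # (2, H*W)
    src = inv @ (coords - center - shift[:, None]) + center
    src_y = np.rint(src[0]).astype(int)
    src_x = np.rint(src[1]).astype(int)
    if flip and rng.random() < 0.5:
        src_x = (w - 1) - src_x  # horizontal flip
    valid = (src_y >= 0) & (src_y < h) & (src_x >= 0) & (src_x < w)
    out = np.zeros((h * w,) + image.shape[2:], dtype=image.dtype)
    out[valid] = image[src_y[valid], src_x[valid]]
    return out.reshape(image.shape)


def augmented_batches(images, labels, batch_size, rng):
    """Endless generator of freshly distorted mini-batches (labels: NumPy array)."""
    n = len(images)
    while True:
        order = rng.permutation(n)
        for start in range(0, n - batch_size + 1, batch_size):
            idx = order[start:start + batch_size]
            batch = np.stack([random_affine(images[i], rng) for i in idx])
            yield batch, labels[idx]
```

Because a new random transform is drawn every time a batch is produced, the network rarely sees exactly the same pixels twice, which is the point of augmenting on the fly rather than storing a fixed, enlarged dataset.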

Alexandre, congratulations – your results are impressive! No doubt, having a deep network improves performance! I’m also impressed that you managed to train it in so few epochs.

I will be very curious to see how you use data augmentation in your next update…

Thanks Julian, actually one epoch in my setup corresponds to one full pass over the training data (I think both Guillaume and you only scan a small portion per epoch). I have had a look at your last experiment; I am quite surprised too that small rotations don’t improve the performance, even slightly…

Very nice results (and post!). I see that people generally tend to use many conv & pooling layers.

Do you know whether it is generally recommended to add so many of them, ending up with an output of size 1×1 (as in your case)?

If not, maybe it’s a question for the course staff.

Thanks!

Yes, it seems that the current trend in computer vision is to use more and more layers (for ImageNet 2014, GoogLeNet had 22 layers and the Oxford net 19). I wanted a large number of conv/pool layers, which is why I ended up with 1×1 feature maps. Note that the units of the layer with 1×1 feature maps have receptive fields covering the whole image.

Thanks for the reply, but I was wondering: do they pad in some smart way, or do they just slowly lose half the size at each conv+pool?

Yes, I think all the best ImageNet networks (GoogLeNet, the Oxford net, AlexNet) use some kind of padding. But I don’t have enough experience to say how much it helps.

Congrats, Alexandre!

I had the same issue with network output always being 0.5. Your post was quite helpful.

Thanks.